Apache Spark Write for Us

Apache Spark Write For Us – Today, some of the world’s biggest firms use Apache Spark to speed up large-scale data operations. Organizations of all sizes rely on big data, but processing terabytes of data for real-time applications can become cumbersome. So can Spark’s fast performance benefit your organization?

What is Apache Spark?

Apache Spark is a fast, distributed framework for large-scale data processing and machine learning. Spark scales horizontally across clusters of machines, which has made it a trusted platform for Fortune 500 companies and tech giants like Microsoft, Apple, and Facebook.

Spark’s directed acyclic graph (DAG) execution engine can run as a stand-alone install, as a cloud service, or anywhere popular distributed computing systems like Kubernetes or Spark’s predecessor, Apache Hadoop, already run.

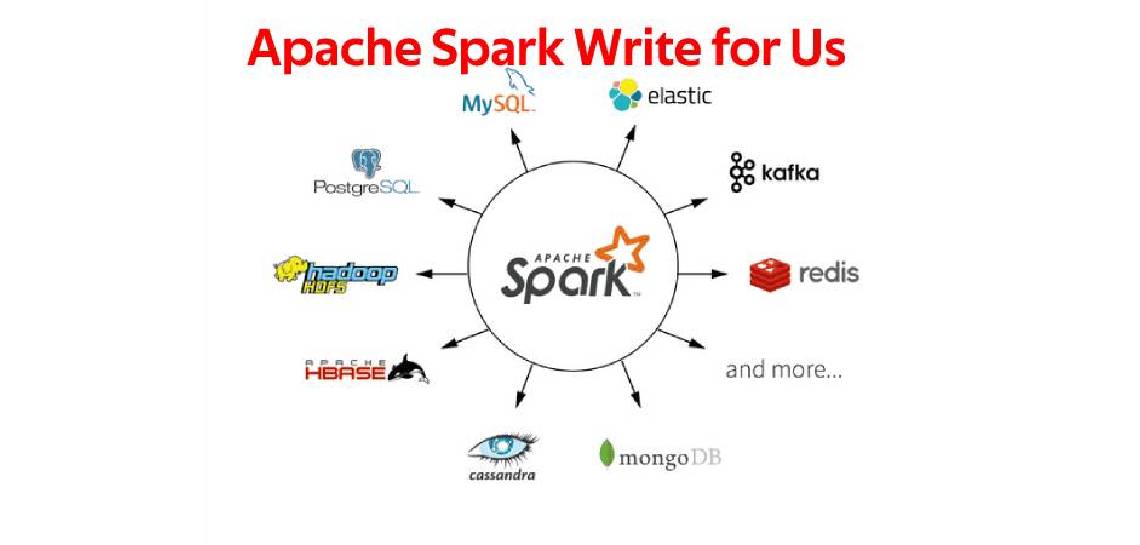

Apache Spark generally has a short learning curve for developers with Java, Python, Scala, or R backgrounds. As with all Apache projects, Spark is supported by a global, open-source community and integrates easily with most environments.

Below is a brief look at the evolution of Apache Spark, how it works, the benefits it offers, and how the right partner can streamline and simplify Spark deployments in almost any organization.

From Hadoop to SQL: The Apache Spark Ecosystem

Like all distributed computing frameworks, Apache Spark works by breaking massive computing jobs into smaller tasks and spreading them across multiple nodes, where they are processed simultaneously.
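The idea of splitting one large job into smaller tasks that run side by side can be sketched in plain Python. This is only an illustration and not Spark code: the function names are hypothetical, and worker threads stand in for cluster nodes.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Split a list into contiguous chunks of roughly equal size."""
    size, remainder = divmod(len(data), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        # The first `remainder` partitions each take one extra element.
        end = start + size + (1 if i < remainder else 0)
        partitions.append(data[start:end])
        start = end
    return partitions


def partial_sum_of_squares(partition):
    """The small task that each worker runs on its own partition."""
    return sum(x * x for x in partition)


def distributed_sum_of_squares(data, num_partitions=4):
    """Run the small task on every partition, then combine the results."""
    partitions = split_into_partitions(data, num_partitions)
    # Assumption: threads play the role of nodes in this toy example.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_results = list(pool.map(partial_sum_of_squares, partitions))
    return sum(partial_results)


total = distributed_sum_of_squares(list(range(1, 101)))
```

A real cluster also has to ship data and code between machines and handle node failures, which this sketch leaves out.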

But Spark’s in-memory data engine lets it keep intermediate results in memory and perform most compute jobs on the fly, rather than writing to disk and reading back between every stage of a multi-stage job.

This critical distinction enables Spark to power through multi-stage processing cycles like those used in Apache Hadoop up to 100 times faster. Its speed, plus an easy-to-master API, has made Spark a default tool for major corporations and developers.
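The contrast between a disk-staged pipeline and an in-memory one can be shown with a toy two-stage job. The sketch below is plain Python under stated assumptions: the stage functions and the JSON intermediate file are hypothetical, and neither function reflects how Hadoop or Spark is actually implemented.

```python
import json
import os
import tempfile


def stage_clean(records):
    """Stage 1: normalize records and drop empty ones."""
    return [r.strip().lower() for r in records if r.strip()]


def stage_count(records):
    """Stage 2: count how often each record occurs."""
    counts = {}
    for r in records:
        counts[r] = counts.get(r, 0) + 1
    return counts


def run_disk_based(records):
    """Write stage 1 output to disk, then read it back for stage 2."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "stage1.json")
        with open(path, "w") as f:
            json.dump(stage_clean(records), f)
        with open(path) as f:
            intermediate = json.load(f)
        return stage_count(intermediate)


def run_in_memory(records):
    """Pass stage 1 output straight to stage 2 without touching disk."""
    return stage_count(stage_clean(records))
```

Both functions return the same result; the in-memory version simply skips the write and read between stages, and that saving grows with every additional stage.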

Apache Spark vs. Hadoop and MapReduce

It’s essential to recognize that not every organization needs Spark’s advanced speed. Hadoop already uses MapReduce to accelerate distributed processing and crunch data sets up to a terabyte quickly. It does this by mapping parallel jobs to the nodes where the data is stored, then reducing the intermediate results by grouping and combining them into a single, consolidated output.

MapReduce performs these jobs so quickly that only the most data-intensive operations are likely to require the speed Spark enables. Some of these include:
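The general MapReduce pattern has three steps: a map step emits key-value pairs, a shuffle step groups the pairs by key, and a reduce step combines each group. A minimal word-count sketch in plain Python follows. It runs in a single process and uses hypothetical function names, so it shows the shape of the pattern rather than Hadoop's API.

```python
from collections import defaultdict


def map_phase(document):
    """Emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(mapped_pairs):
    """Group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in mapped_pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Combine the values of each key into one result."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(documents):
    """Run map over every document, then shuffle and reduce."""
    mapped = []
    for doc in documents:
        # In a real cluster, each document could be mapped on a different node.
        mapped.extend(map_phase(doc))
    return reduce_phase(shuffle_phase(mapped))
```

For example, `word_count(["big data", "Big Spark"])` counts "big" twice and "data" and "spark" once each.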

- Social media services

- Telecom

- Multimedia streaming service providers

- Large-scale data analysis

Because Spark was built to work with and run on Hadoop infrastructure, the two systems work well together. As a result, fast-growing organizations built on Hadoop can easily add Spark’s speed and functionality as needed.

The Benefits of Apache Spark

For companies that rely on big data to excel, Spark comes with a handful of distinct advantages over competitors:

Speed — As mentioned, Spark’s speed is its best-known asset. Spark’s in-memory processing engine can be up to 100 times faster than Hadoop MapReduce and similar products, which spend time on disk reads, disk writes, and network transfers to process batches.

Fault tolerance — Spark batch jobs typically read from fault-tolerant data sources, so lost work can be recomputed from the original input. Streaming data needs an additional layer of tolerance, because a live stream may not be available to read a second time. Spark can replicate incoming streaming data across multiple nodes in real time, so that if one node fails, the data can be recovered from a replica. In this way, Spark provides high reliability even for live-streamed data.

Minimized hand-coding — Spark’s high-level APIs let developers express common operations in far less code than hand-written MapReduce jobs require, and its web UI makes it easier to monitor running applications. Though manual customization sometimes best suits application challenges, the built-in operations offer quick and easy options for everyday tasks.

Usability — Spark’s core APIs are available in Java, Scala, Python, and R, making it easy to build and scale robust applications.

Active developer community — Industry giants like Hitachi Solutions, TripAdvisor, and Yahoo have successfully deployed the Spark ecosystem at a massive scale. In addition, a global support and development community backs Spark and routinely improves builds.
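On the fault-tolerance point, Spark's resilient distributed datasets (RDDs) record the chain of transformations that produced them, known as lineage, so a lost partition can be recomputed from the source data. The toy class below sketches that idea in plain Python. `LineageDataset` and its methods are hypothetical names, not Spark's API, and the sketch assumes the source data can always be read again.

```python
class LineageDataset:
    """Toy dataset that remembers how it was derived from its source."""

    def __init__(self, source, transformations=()):
        self.source = source  # assumed durable and re-readable
        self.transformations = tuple(transformations)
        self._cache = None  # computed result, which may be lost

    def map(self, fn):
        """Return a new dataset whose lineage has one more step."""
        return LineageDataset(self.source, self.transformations + (fn,))

    def compute(self):
        """Return the result, replaying the lineage if nothing is cached."""
        if self._cache is None:
            data = list(self.source)
            for fn in self.transformations:
                data = [fn(x) for x in data]
            self._cache = data
        return self._cache

    def lose_cache(self):
        """Simulate a node failure that wipes the computed result."""
        self._cache = None


dataset = LineageDataset([1, 2, 3]).map(lambda x: x * 2).map(lambda x: x + 1)
before_failure = dataset.compute()
dataset.lose_cache()
after_failure = dataset.compute()  # rebuilt by replaying the lineage
```

Because the result is rebuilt from the recorded steps, the values after the simulated failure match the values before it.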

Guidelines of the Article – Apache Spark Write for Us

- The author cannot republish their guest post content on any other website.

- Your article or post should be unique, not copied from or published on any other website.

- You cannot add affiliate codes, advertisements, or referral links to articles.

- High-quality articles will be published, and poor-quality articles will be rejected.

- An article must be more than 350 words.

- You can send your article to contact@marketing2business.com

How to Submit Your Articles?

To submit a guest post, please read through the guidelines above. You can contact us at contact@marketing2business.com

Why Write for Marketing2Business – Apache Spark Write for Us

- If you write for us, your article reaches a targeted audience of consumers, giving your business huge exposure.

- This will help in building relationships with your target audience.

- If you write for us, the visibility of your brand and content grows worldwide.

- We are also present on social media, and we share your article on our social channels.

- You can link back to your website in the article, which shares SEO value with your website.

Search Terms Related to Apache Spark Write for Us

- Apache Spark

- Big Data Processing

- Cluster Manager

- Data Parallelism

- Dataflow

- DataFrames

- Deprecated

- Distributed Computing

- Fault Tolerance

- GraphX

- In-Memory Computing

- Interface

- MLlib

- Multiset

- Open-Source

- Paradigm

- Resilient Distributed Datasets (RDDs)

- Spark Cluster Manager

- Spark History Server

- Spark Jobserver

- Spark Mesos

- Spark Pipelines

- Spark REST Server

- Spark SQL

- Spark Standalone

- Spark Streaming

- Spark Thrift Server

- Spark Web UI

- Spark YARN

- Working Set

Search Terms for Apache Spark Write for Us

Apache Spark Write for us

Guest Post Apache Spark

Contribute Apache Spark

Apache Spark Submit post

Submit an article on Apache Spark

Become a guest blogger at Apache Spark

Apache Spark writers wanted

Suggest a post on Apache Spark

Apache Spark guest author

You can contact us at contact@marketing2business.com
